Difference between revisions of "MicroMV Face Recognition"

=='''Basic principle'''==

*MicroMV captures a face and obtains the face coordinates
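In OpenMV-style firmware, `img.find_features` returns each detected face as an `(x, y, w, h)` rectangle, so the "face coordinates" can be derived from those tuples. The following is a minimal sketch in plain Python, assuming the center of the largest rectangle is the coordinate of interest; `face_center` and `largest_face` are hypothetical helper names, not part of the MicroMV or OpenMV API, and the rectangles are made-up sample values.

```python
def face_center(rect):
    """Return the (cx, cy) center of an (x, y, w, h) rectangle."""
    x, y, w, h = rect
    return (x + w // 2, y + h // 2)


def largest_face(rects):
    """Return the rectangle with the largest area, or None if the list is empty."""
    if not rects:
        return None
    return max(rects, key=lambda r: r[2] * r[3])


# Made-up rectangles standing in for a real detection result.
faces = [(10, 20, 40, 40), (100, 50, 60, 64)]
target = largest_face(faces)
print(face_center(target))
```

On the camera, the list passed to `largest_face` would be the result of the detection call instead of the sample list.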

=='''Preparing the MicroMV code'''==

<source lang="py">
# Face Detection Example
#
# This example shows off the built-in face detection feature of the OpenMV Cam.
#
# Face detection works by using the Haar Cascade feature detector on an image. A
# Haar Cascade is a series of simple area contrast checks. For the built-in
# frontalface detector there are 25 stages of checks, with each stage having
# hundreds of checks apiece. Haar Cascades run fast because later stages are
# only evaluated if previous stages pass. Additionally, your OpenMV Cam uses
# a data structure called the integral image to quickly execute each area
# contrast check in constant time (feature detection is grayscale only
# because of the space requirement for the integral image).

import sensor, time, image

# Reset sensor
sensor.reset()

# Sensor settings
sensor.set_contrast(1)
sensor.set_gainceiling(16)
# HQVGA and GRAYSCALE are the best for face tracking.
sensor.set_framesize(sensor.HQVGA)
sensor.set_pixformat(sensor.GRAYSCALE)

# Load Haar Cascade
# By default this will use all stages; fewer stages is faster but less accurate.
face_cascade = image.HaarCascade("frontalface", stages=25)
print(face_cascade)

# FPS clock
clock = time.clock()

while True:
    clock.tick()

    # Capture snapshot
    img = sensor.snapshot()

    # Find objects.
    # Note: Lower scale factor scales-down the image more and detects smaller objects.
    # Higher threshold results in a higher detection rate, with more false positives.
    objects = img.find_features(face_cascade, threshold=0.75, scale_factor=1.25)

    # Draw objects
    for r in objects:
        img.draw_rectangle(r)

    # Print FPS.
    # Note: Actual FPS is higher; streaming the frame buffer (FB) makes it slower.
    print(clock.fps())
</source>
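The header comment in the example credits the integral image for making each area contrast check run in constant time. The following is a minimal sketch of that idea in plain Python, not OpenMV's actual implementation: `integral_image` and `rect_sum` are hypothetical helper names, and the image is a small list of lists rather than a camera frame.

```python
def integral_image(pixels):
    """Build a summed-area table with one extra row and column of zeros."""
    height = len(pixels)
    width = len(pixels[0]) if height else 0
    table = [[0] * (width + 1) for _ in range(height + 1)]
    for y in range(height):
        row_sum = 0
        for x in range(width):
            row_sum += pixels[y][x]
            table[y + 1][x + 1] = table[y][x + 1] + row_sum
    return table


def rect_sum(table, x, y, w, h):
    """Sum the pixels in the rectangle (x, y, w, h) using four table lookups."""
    return (table[y + h][x + w] - table[y][x + w]
            - table[y + h][x] + table[y][x])


# A tiny 3x4 grayscale "image" used only for illustration.
pixels = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
]
table = integral_image(pixels)

# A Haar-like feature compares the brightness of adjacent areas,
# here the left 2x2 block against the right 2x2 block of the top two rows.
left = rect_sum(table, 0, 0, 2, 2)
right = rect_sum(table, 2, 0, 2, 2)
print(left, right, right - left)
```

Building the table costs one pass over the image, after which any rectangle sum needs only four lookups regardless of the rectangle's size. The table holds one running sum per pixel, which is the space cost the header comment refers to.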

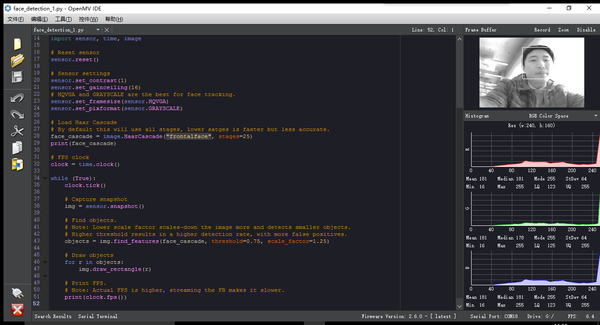

The result looks like this:

[[File:microMVGettingStart8.png||600px|center]]

Revision as of 05:25, 16 May 2018 (Wednesday)
