“MicroMV 人脸识别”的版本间的差异

| 第1行: | 第1行: | ||

*<p style="font-size:140%">'''基本原理'''</p> | *<p style="font-size:140%">'''基本原理'''</p> | ||

| − | MicroMV捕捉人脸获取人脸坐标 | + | *MicroMV捕捉人脸获取人脸坐标 |

| − | 通过pid.py算法计算出舵机将要转动的角度,并通过串口发送给microduino | + | *通过pid.py算法计算出舵机将要转动的角度,并通过串口发送给microduino |

| − | microduino接收数据后控制舵机 | + | *microduino接收数据后控制舵机 |

== 1、快速上手<br /> == | == 1、快速上手<br /> == | ||

2018年5月16日 (三) 03:29的版本

基本原理

- MicroMV捕捉人脸获取人脸坐标

- 通过pid.py算法计算出舵机将要转动的角度,并通过串口发送给microduino

- microduino接收数据后控制舵机

1、快速上手

把pid.py复制到MicroMV生成的U盘空间中

pid.py:

from pyb import millis

from math import pi, isnan

class PID:

_kp = _ki = _kd = _integrator = _imax = 0

_last_error = _last_derivative = _last_t = 0

_RC = 1/(2 * pi * 20)

def __init__(self, p=0, i=0, d=0, imax=0):

self._kp = float(p)

self._ki = float(i)

self._kd = float(d)

self._imax = abs(imax)

self._last_derivative = float('nan')

def get_pid(self, error, scaler):

tnow = millis()

dt = tnow - self._last_t

output = 0

if self._last_t == 0 or dt > 1000:

dt = 0

self.reset_I()

self._last_t = tnow

delta_time = float(dt) / float(1000)

output += error * self._kp

if abs(self._kd) > 0 and dt > 0:

if isnan(self._last_derivative):

derivative = 0

self._last_derivative = 0

else:

derivative = (error - self._last_error) / delta_time

derivative = self._last_derivative + \

((delta_time / (self._RC + delta_time)) * \

(derivative - self._last_derivative))

self._last_error = error

self._last_derivative = derivative

output += self._kd * derivative

output *= scaler

if abs(self._ki) > 0 and dt > 0:

self._integrator += (error * self._ki) * scaler * delta_time

if self._integrator < -self._imax: self._integrator = -self._imax

elif self._integrator > self._imax: self._integrator = self._imax

output += self._integrator

return output

def reset_I(self):

self._integrator = 0

self._last_derivative = float('nan')

把face_detection1024.py替换到MicroMV生成的U盘空间中的main.py做为主程序

face_detection1024.py:

import sensor, time, image

from pyb import UART

from pid import PID

from pyb import LED

import json

red_led = LED(1)

green_led = LED(2)

blue_led = LED(3)

ir_led = LED(4)

pan_pid = PID(p=0.42, i=0.01, imax=90)

tilt_pid = PID(p=0.22, i=0.005, imax=90)

pan_servo_pos=90

tilt_servo_pos=90

pan_center = 60

tilt_center = 40

# Reset sensor

sensor.reset()

# Sensor settings

sensor.set_contrast(1)

sensor.set_gainceiling(16)

# HQVGA and GRAYSCALE are the best for face tracking.

sensor.set_framesize(sensor.HQQVGA)

sensor.set_pixformat(sensor.GRAYSCALE)

sensor.set_brightness(-3)

# Load Haar Cascade

# By default this will use all stages, lower satges is faster but less accurate.

face_cascade = image.HaarCascade("frontalface", stages=25)

print(face_cascade)

# FPS clock

clock = time.clock()

uart = UART(4, 115200)

while (True):

clock.tick()

# Capture snapshot

img = sensor.snapshot()

# Find objects.

# Note: Lower scale factor scales-down the image more and detects smaller objects.

# Higher threshold results in a higher detection rate, with more false positives.

objects = img.find_features(face_cascade, threshold=0.75, scale_factor=1.25)

# Draw objects

for r in objects:

red_led.on()

img.draw_rectangle(r)

pan_center = int(r[0]+r[2]/2)

tilt_center = int(r[1]+r[3]/2)

img.draw_cross(pan_center, tilt_center)

pan_error = pan_center-img.width()/2

tilt_error = tilt_center-img.height()/2

pan_output=pan_pid.get_pid(pan_error,1)/3

tilt_output=tilt_pid.get_pid(tilt_error,1)

pan_servo_pos=int(pan_servo_pos-pan_output)

tilt_servo_pos=int(tilt_servo_pos+tilt_output)

if pan_servo_pos>=170:

pan_servo_pos=170

if pan_servo_pos<=10:

pan_servo_pos=10

if tilt_servo_pos>=160:

tilt_servo_pos=160

if tilt_servo_pos<=30:

tilt_servo_pos=30

output_str="[%d,%d,%d]" % (pan_servo_pos,tilt_servo_pos,r[2])

print('you send:',output_str)

uart.write(output_str+'\n')

red_led.off()

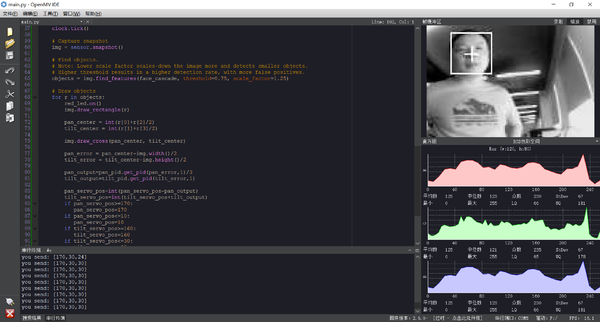

IDE运行效果为

屏幕中有人脸就会用方框注明

2、知识延伸

颜色空间

函数:

• 函数说明